Premise

Have you ever received a CAB (change advisory board) submission only for it to be deferred? A key element was absent or an important aspect not considered?

The idea here is to build a basic proof-of-concept workflow that reviews CAB changes prior to them reaching CAB - giving the author an opportunity to revise their submission and making it more likely to succeed.

An LLM wont have all the context it needs nor a perfect understanding of your environment. That’s okay - we strive to build a workflow with a set of operating principles and guidelines that guide the model to produce useful feedback and strengthen the change control process.

The aim is not to completely remove humans from the process. Human in the loop is integral and will have final decision authority.

I’d consider this workflow a success if it leads to refined submissions, more expedient processing of changes, and an overall stronger change control process.

Nor is it limited to CAB - the basic principles here would work for any review workflow, I imagine.

This work can serve as a starting point for a broader and more capable AI agent later down the line.

I’ll be using..

..to make this work. If you lack the hardware to run models locally, then ollama components can be easily swapped out for an LLM API providers such as OpenAI

, Anthropic

, Google

, or another one of your choosing.

Designing a workflow

Let’s consider, broadly, the approaches we can take:

- Single-pass

- Regular model with tuned system prompt

- CoT model (chain-of-thought) built-in

- RAG with environmental context

- Multi-pass

- Regular model with tuned system prompt

- CoT model (chain-of-thought) built-in

- RAG with environmental context

- Role-playing

- Agentic

- RAG with environmental context

- Role-playing

- Tool or function calling

Additional considerations:

- System Prompt Tuning:

- does a highly detailed system prompt produce better outcomes, is there a point of diminishing returns?

- how closely can a model ahere to instructions and response formats?

- Retrieval-Augmented Generation (RAG):

- can environmental context - ie infrastructure code, documentation, emails - impact quality of the evaluation?

- can a model even make sense of all the data we’re throwing at it?

- can additional context lead to better evaluation or just more nit-picking and repetition?

- Role-playing:

- does simulating the perspectives of stakeholders improve the overall quality of the analysis? ie a simulated interaction between an engineer and a manager.

- adversarial models - one model tries to aggressively justify the change to go ahead, whilst the other argues for it to be rejected. Does that lead to better discovery of edge cases?

- Agents:

- realistically, how far can we break down a task before we see diminishing returns?

- can multiple tuned experts produce better results than just a clever system prompt?

- Tool Use:

- what kind of tools are available to the LLM and how can they expand its reach?

- is there a limit to how useful tools can be? Beyond various means of information retrieval, do we want an agent to have the capability to independently test a change? Would that even be possible or are we encroaching on the responsibilities of engineers?

- Context window:

- can we fit all the relevant information and supporting evidence in-context?

- is there a trade-off to be made between cheaper models vs more capable models? probably not at the scale we’re working with.

- RAG helps when data to be evaluated exceeds context window, but then again - can a model make sense of all that data?

- Model Selection:

- Does using different LLMs produce better results?

- are there any biases or weaknesses in specific models that can be overcome by mixing multiple models at different stages of the analysis?

To keep things manageable, I’m going to start off with a multi-pass approach, using Microsoft’s Phi4 model

. I’m going to leave out RAG, role-play and tool-calling agents for later iterations.

Prompt tuning

To let us iterate faster, I’m including a CAB generator - just another LLM call with a custom prompt - to generate unique CAB submissions on each run. This will give me a large variety to test with, I’m hoping that it will help me ascertain the effectivness of the workflow across a wide spectrum of inputs.

The workflow

This is the logcal flow at a high level. In my testing, substitute “human” for an LLM generating inputs.

We can swap between regular, CoT, and other models to see what kind of results we get.

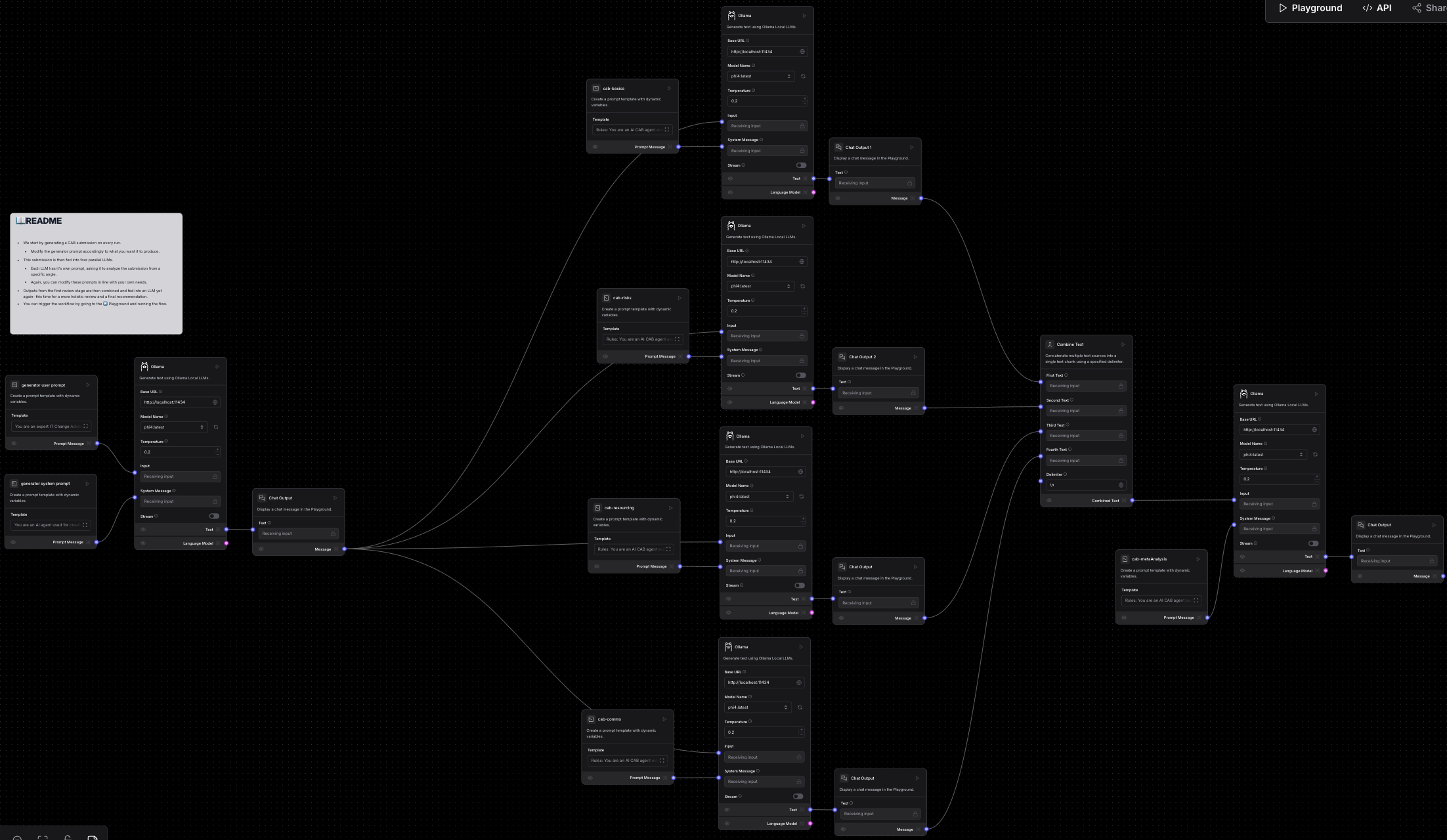

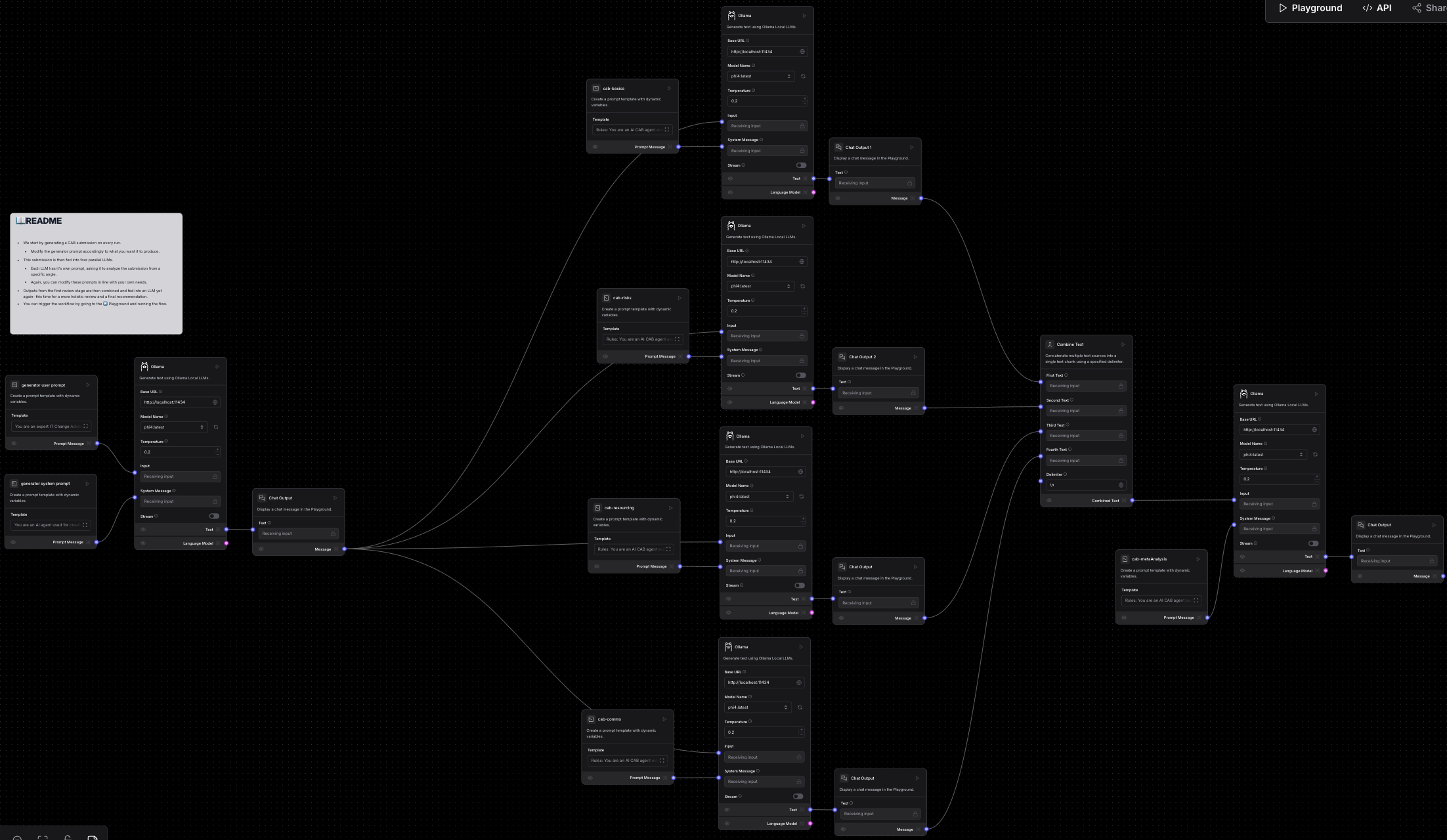

Langflow Implementation

- Langflow 1.1.1 running in docker

- ollama running on a server in my network

- Phi4 large language model from Microsoft.

Workflow floorplan in Langflow:

With everything set up, let’s run the workflow and..

Run through the results

First, model generates a change..

Change Title: Implementation of Automated Backup System for On-Premises File Servers

Description:

This change involves the deployment of an automated backup solution for our on-premises file servers to enhance data protection and disaster recovery capabilities. The new system will utilize a third-party tool, “BackupPro,” which supports incremental backups, encryption, and cloud storage integration.

Reason for Change:

The current manual backup process is time-consuming and prone to human error. Implementing an automated solution will ensure regular, reliable backups, reduce administrative overhead, and improve our ability to recover data quickly in the event of a failure or disaster.

Impact:

- Systems Affected: On-premises file servers (FS01, FS02, FS03)

- Services Affected: File storage services used by HR, Finance, and Operations departments

- Users Affected: Approximately 200 employees across multiple departments

Level of Detail:

The change request includes a high-level overview of the solution architecture, integration points with existing systems, and a brief outline of the configuration settings. Detailed technical specifications will be provided in an appendix.

Urgency:

This is scheduled as part of our quarterly IT improvement plan. The implementation should occur within the next 60 days to align with our data protection policy review cycle.

Risk Level:

Medium risk due to potential disruptions during initial setup and integration testing. However, thorough planning and a phased rollout will mitigate these risks.

Backout Plan:

- Step 1: Revert configuration changes on affected servers.

- Step 2: Restore previous backup routines temporarily until the issue is resolved.

- Step 3: Conduct a root cause analysis to prevent recurrence.

Test Plan:

- Pre-Implementation Testing: Validate BackupPro installation and configuration in a test environment.

- During Implementation: Monitor system performance and log any errors during initial backups.

- Post-Implementation: Verify backup integrity by performing a full restore on a test server.

Maintenance Window:

The implementation will occur over two weekends, with the first phase focusing on setup and testing (Saturday-Sunday), followed by the second phase for final deployment and validation (Saturday-Sunday).

Target Audience:

This change request is prepared by the IT Operations Manager, reflecting a balance between technical detail and strategic overview suitable for both technical staff and management review.

..it reviews the basics..

Evaluation:

What is the proposed change? ✅

The submission clearly outlines the implementation of an automated backup system using “BackupPro.”

Why is this change necessary? ✅

It addresses issues with the current manual process, highlighting time consumption and error potential.

What are the expected benefits? ✅

Benefits include enhanced data protection, reduced administrative overhead, and improved disaster recovery capabilities.

What systems/services will be affected? ✅

The submission specifies on-premises file servers and services used by HR, Finance, and Operations departments.

How complex is the change? ✅

The complexity is acknowledged as medium risk with a phased rollout plan to mitigate potential disruptions.

What is the proposed implementation plan? ✅

A detailed two-phase implementation over weekends is provided, including setup, testing, deployment, and validation steps.

What is the proposed rollback plan? ✅

A clear backout strategy is outlined, involving reverting configuration changes and restoring previous backup routines if necessary.

Overall, this change submission is well-detailed and meets all evaluation criteria. No further recommendations are needed at this stage.

..then the risks..

Evaluation:

- What is the potential impact on users/customers? ✅

- Positive impact: Improved data protection and reduced risk of data loss.

- Minimal disruption expected during scheduled maintenance windows.

- What are the potential risks? ❌

- Submission lacks detail on specific risks such as compatibility issues with existing systems or potential downtime during integration.

- What is the likelihood of these risks? ✅

- Medium risk acknowledged, but specific probabilities for identified risks are not provided.

- What is the impact if these risks occur? ❌

- Impact assessment missing; should include potential data loss duration and service disruption extent.

- What are the mitigation plans for these risks? ✅

- Phased rollout and thorough testing are mentioned as mitigations, but specific actions for identified risks are not detailed.

- Are there dependencies on other changes/projects? ✅

- No dependencies listed; however, confirmation from relevant departments would strengthen this section.

- What is the proposed testing plan? ✅

- Comprehensive pre- and post-implementation testing outlined.

- Are there any contradictions in the change? ✅

- No contradictions identified within the provided information.

Recommendations:

- Potential Risks: Provide a detailed list of potential risks, including compatibility issues and downtime scenarios.

- Impact Assessment: Include an impact assessment for each risk to clarify consequences if they occur.

- Mitigation Plans: Expand on mitigation strategies with specific actions tailored to each identified risk.

Overall, the change submission is well-structured but requires additional detail in risk management areas to ensure comprehensive evaluation and planning.

..then resource implications..

Evaluation Against Criteria:

- What resources are required? ❌

- Recommendation: Specify personnel involved (e.g., IT specialists), tools needed (e.g., BackupPro licenses), and budget allocation.

- Any special skills required? ✅

- The change involves IT professionals familiar with backup solutions and server management.

- What is the proposed start and end date/time? ❌

- Recommendation: Provide specific dates for each phase of implementation, including setup, testing, and deployment.

- What is the maintenance window (if applicable)? ✅

- The change specifies a two-weekend maintenance window for implementation.

- Is out of hours work necessary? ✅

- Implementation will occur over weekends to minimize disruption.

- Who is responsible for implementation and verification? ❌

- Recommendation: Identify specific team members or roles responsible for each phase, including setup, testing, and final deployment.

- Are there any contradictions in the change? ✅

- No apparent contradictions are present in the current submission.

This evaluation highlights areas needing clarification to ensure a smooth approval process and successful implementation of the automated backup system.

.. communications plan and approvals..

Evaluation Against Criteria:

- Who needs to be informed?

- Stakeholders: Department heads of HR, Finance, and Operations

- Users: Employees using file storage services

- Support: IT support team

- Why do they need to be informed?

- To ensure awareness of potential service disruptions during implementation.

- To prepare for any changes in backup procedures or access.

Has this been discussed with relevant teams/individuals?

❌ The submission does not specify discussions with the IT support team or department heads.

Who is the business owner/sponsor?

✅ The IT Operations Manager is identified as responsible for overseeing the change.

Are there financial implications?

- Yes, purchasing and licensing BackupPro software incurs costs.

- Potential cost savings from reduced manual backup efforts should be quantified.

- Are there any contradictions in the change?

✅ The proposal is consistent with stated goals of improving data protection and reducing administrative overhead.

Recommendations:

- [3] Discuss implementation details with IT support and department heads to ensure alignment and address potential concerns.

- Clarify financial implications, including a cost-benefit analysis, to justify the investment in BackupPro.

- Include a detailed appendix with technical specifications for review by IT staff.

.. and now collate responses, do another review, and generate a recommendation:

LLM Assistant - CAB Evaluation

<change_number>

Implementation of Automated Backup System Using “BackupPro”

Are there any contradictions?

- No contradictions are identified within the provided information.

What are the risks and their likelihood?

- Potential risks include compatibility issues with existing systems, potential downtime during integration, and unspecified specific probabilities for these risks.

- The likelihood of these risks is acknowledged as medium risk, but detailed probabilities are not provided.

Summarize areas of the change that need improvement:

- Detailed identification of specific risks such as compatibility issues or potential downtime scenarios is needed.

- An impact assessment should be included to clarify consequences if identified risks occur.

- Mitigation plans require expansion with specific actions tailored to each identified risk.

- Resources required, including personnel, tools, and budget allocation, need specification.

- Specific dates for each phase of implementation are necessary.

- Identification of team members or roles responsible for each phase of the change is needed.

- Discussions with IT support and department heads should be confirmed.

Recommendation:

- The change can proceed but requires additional detail in risk management areas to ensure comprehensive evaluation and planning. Addressing these gaps will help mitigate potential issues during implementation, ensuring a smoother transition to the automated backup system.

Setup

If you want to try any of this yourself, I’ve uploaded a docker compose file and the Langflow template to a GitHub repo.

Follow the steps below to reproduce my setup. I’m assuming you’ve got docker and ollama installed already.

git clone https://github.com/MRKups/llm-workflow-cab-1.git, or download from webcd llm-workflow-cab-1docker compose up -d- Open up a terminal,

ollama serve - Open http://localhost:7860/

- Select New Flow, Blank Flow

- Click on flow title (top center), Import

- Import

cab-workflow-1.json - Run the workflow

Overall, I’m quite pleased with how this turned out. While the quality of the final output is heavily dependent on the quality of the model you are using, the results produced by Phi4 are very good. Phi4 was able to extract all the relevant information from the change and evaluate it, correctly highlighting weakness areas and calling attention to unaddressed risks.

Large language model workflows and ‘AI’ agents are opening up a new frontier, bringing the next wave of automation and creating new opportunities. Let’s remember - models and integrations will only improve as time goes on, enabling new use cases and applications.

I hope this got you thinking about how these tools can be applied to everyday problems and what else may be possible with time.